Day 3: Testing existing Machine Learning Frameworks

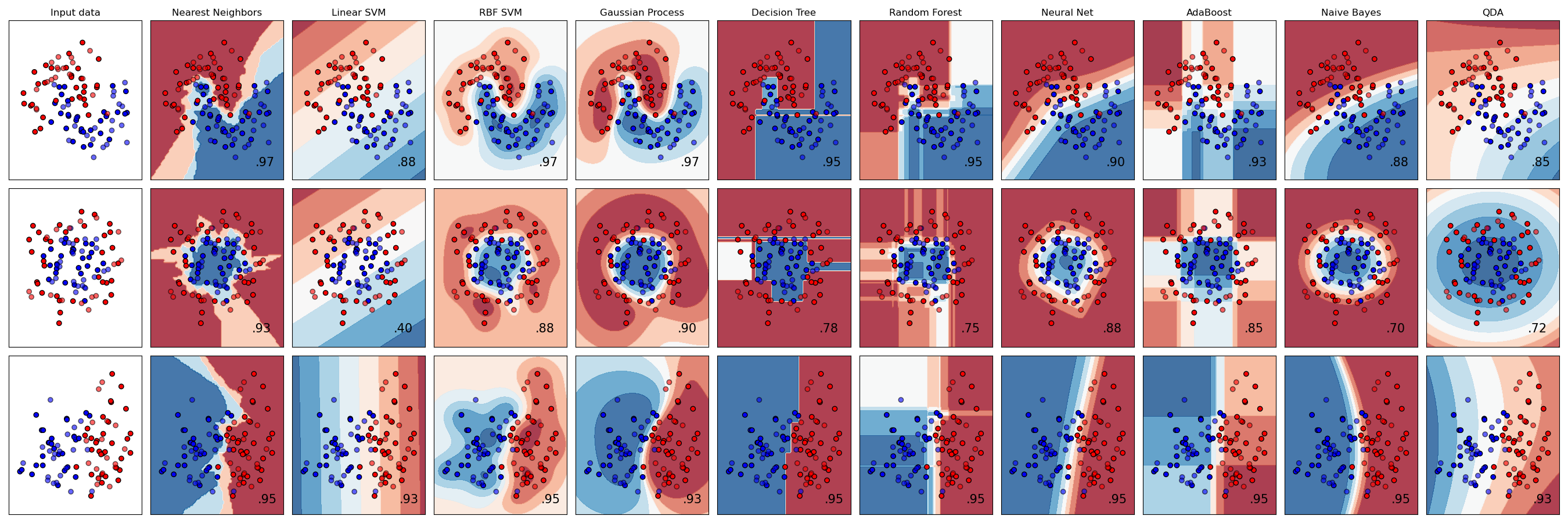

I started off today by learning more linear algebra and some of the classifiers used to group data. I learned about transposing vectors, which turns a row vector into a column vector (or vice versa) so that its shape matches what a calculation or data set expects. After that, Tyler and Kushal gave me a quick overview of several classifiers.

I learned about Nearest Neighbors first because it is the simplest. A nearest neighbor classifier finds the training data point closest to the point you are trying to classify and gives the new point that data point's label. I have a visual below that shows how nearest neighbor and the other classifiers divide up the same data.

After learning the general concepts of classification, I was sent off to implement them on my own. Despite some early struggles, I wrote a program that successfully implemented Nearest Neighbors, Linear SVM, RBF SVM, Gaussian Process, and an MLP Neural Net. These are all useful models, but only the MLP Neural Net is a neural network, the kind of model that deep learning builds on. Each model classifies data in a different way, which the image below shows well. Implementing these methods in Python was challenging but rewarding.
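The nearest neighbor rule described above is short enough to write from scratch. Below is a minimal NumPy sketch, assuming plain Euclidean distance and a single neighbor (1-NN); the function name `nearest_neighbor_predict` and the toy points are hypothetical, for illustration only. It also shows one place where a transpose matters: `X_test @ X_train.T` lines up the feature dimensions so every test-to-train dot product is computed in a single matrix multiplication.

```python
import numpy as np


def nearest_neighbor_predict(X_train, y_train, X_test):
    """Hypothetical helper: give each test point the label of its closest training point (1-NN)."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)

    # Squared Euclidean distance via ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b
    test_sq = np.sum(X_test ** 2, axis=1)[:, np.newaxis]    # shape (n_test, 1)
    train_sq = np.sum(X_train ** 2, axis=1)[np.newaxis, :]  # shape (1, n_train)
    # The transpose turns X_train from (n_train, d) into (d, n_train), so the
    # product has shape (n_test, n_train): one dot product per test/train pair.
    sq_dists = test_sq + train_sq - 2 * X_test @ X_train.T

    # Index of the closest training point for each test point.
    nearest = np.argmin(sq_dists, axis=1)
    return y_train[nearest]


# Toy data: two small clusters labeled 0 and 1.
X_train = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]])
y_train = np.array([0, 0, 1, 1])
X_test = np.array([[0.2, 0.4], [5.5, 4.8]])
print(nearest_neighbor_predict(X_train, y_train, X_test))  # [0 1]
```

Using only one neighbor makes the classifier very sensitive to noisy points; averaging the labels of the k closest points (k-NN) is the usual way to smooth that out.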

Visual of classification models:

Source: http://scikit-learn.org/stable/auto_examples/classification/plot_classifier_comparison.html

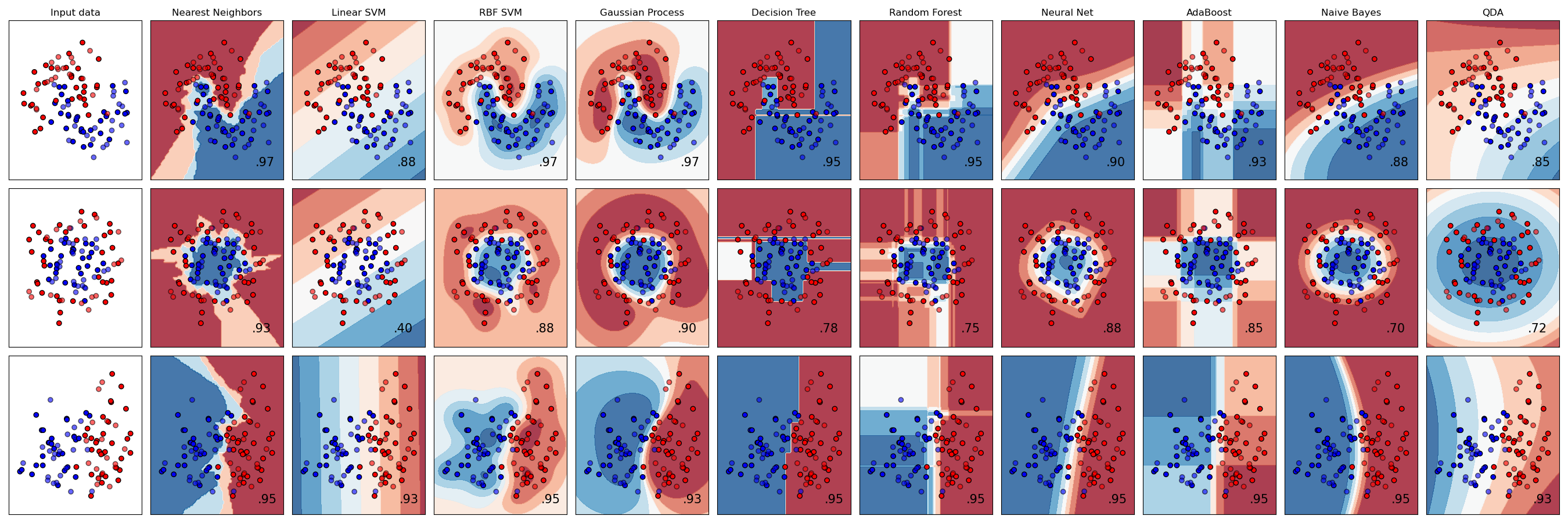
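A comparison like the one in the figure can be sketched with scikit-learn, the library the figure comes from. This is a minimal sketch, not the program described above: it assumes scikit-learn is installed, uses a synthetic two-moons data set chosen for illustration, and borrows hyperparameters similar to those in scikit-learn's classifier-comparison example without any tuning.

```python
from sklearn.datasets import make_moons
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic 2-D data (an assumption for illustration).
X, y = make_moons(n_samples=200, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=42
)

# The five model types named in the post; settings are illustrative, not tuned.
classifiers = {
    "Nearest Neighbors": KNeighborsClassifier(n_neighbors=3),
    "Linear SVM": SVC(kernel="linear", C=0.025),
    "RBF SVM": SVC(kernel="rbf", gamma=2, C=1),
    "Gaussian Process": GaussianProcessClassifier(1.0 * RBF(1.0)),
    "Neural Net (MLP)": MLPClassifier(alpha=1, max_iter=1000, random_state=0),
}

scores = {}
for name, clf in classifiers.items():
    # Standardize features first, then fit the classifier.
    model = make_pipeline(StandardScaler(), clf)
    model.fit(X_train, y_train)
    # score() returns mean accuracy on the held-out test split.
    scores[name] = model.score(X_test, y_test)
    print(f"{name:18s} accuracy = {scores[name]:.2f}")
```

Scaling the features matters most for the distance- and kernel-based models (nearest neighbors, the SVMs, the Gaussian process), since an unscaled feature with a large range would dominate the distance calculation.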