Day 4: Stanford Lecture and the Softmax Function

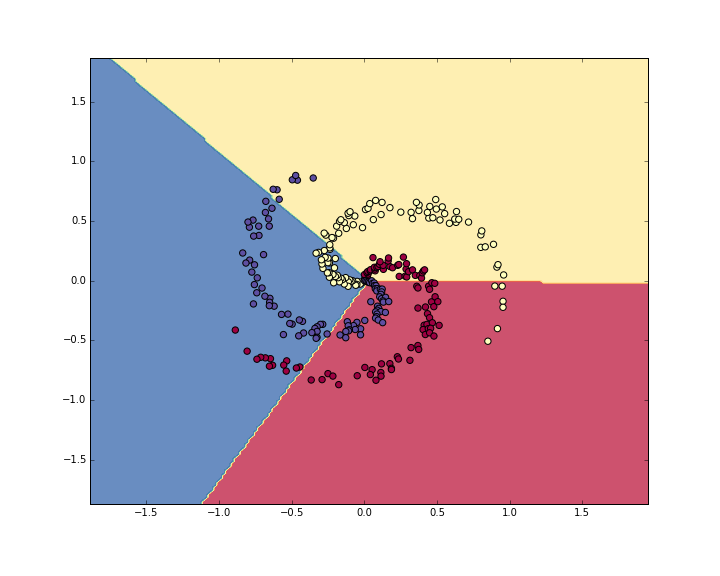

Today I watched a Stanford lecture on loss functions in machine learning and on optimizing the cost function. Loss functions are a crucial part of neural networks. I learned the basics of feed-forward neural networks from a helpful web series (https://www.youtube.com/watch?v=ZzWaow1Rvho&list=PLxt59R_fWVzT9bDxA76AHm3ig0Gg9S3So). After watching the YouTube series I switched to the Stanford lectures to learn more about loss functions. The purpose of a loss function is to guide updates to your neural network's classifying function. It compares your predicted result to the actual result and turns the difference into a single number, so you can tell where your model went wrong and update it to improve on the next iteration. I learned about hinge loss and squared loss, which are two different ways to calculate that error. Using this knowledge I was able to begin exploring the softmax function. I don't fully understand the math behind it yet, and I will continue to look into it tomorrow. The image in this post is a visualization of a neural net that uses softmax. Today I learned an incredible amount about feed-forward neural networks and how they use loss functions to update their predictions.
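To make these ideas a bit more concrete for myself, here is a minimal sketch in plain Python of hinge loss, squared loss, and softmax for a single example. The function names, the `margin` default of 1.0, and the sample scores are my own assumptions for illustration, not code from the lecture, and the hinge loss here is the multiclass form as I understand it.

```python
import math


def hinge_loss(scores, correct_index, margin=1.0):
    """Multiclass hinge loss for one example (assumed form, margin of 1.0)."""
    correct = scores[correct_index]
    # Penalize every wrong class whose score comes within `margin` of the correct one.
    return sum(
        max(0.0, s - correct + margin)
        for j, s in enumerate(scores)
        if j != correct_index
    )


def squared_loss(predicted, actual):
    """Sum of squared differences between predicted and actual values."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual))


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_loss(scores, correct_index):
    """Negative log of the softmax probability assigned to the correct class."""
    return -math.log(softmax(scores)[correct_index])


# Hypothetical scores for three classes; class 0 is the correct one.
scores = [3.2, 5.1, -1.7]
print("hinge loss:", hinge_loss(scores, 0))
print("softmax probabilities:", softmax(scores))
print("cross-entropy loss:", cross_entropy_loss(scores, 0))
```

With these made-up scores the hinge loss comes out to roughly 2.9, because only the second class scores within the margin of the correct class. Softmax gives every class a probability, so its loss never reaches exactly zero.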

Source: http://cs231n.github.io/neural-networks-case-study/
